With a tinge of jealousy, I’m sitting on the couch this morning watching the keynote for Re:Invent...

AWS re:Invent 2023 - Adam Selipsky Recap - AI & Data take Centre Stage

I thought this was the year I’d return to Vegas for AWS re:Invent, but with a major project go-live a day away, it is instead an early morning in the office in NZ watching the livestream.

It wouldn’t be the CEO keynote without a hype reel and a band playing - Sweet Child O’ Mine this time - as the stage is set. Cue the entry of the sports jacket, jeans and sneakers… and CEO Adam Selipsky. I do wonder if the ongoing AWS sneaker look will age well.

The first in-depth segment covers the growing partnership between AWS and Salesforce, in particular some collaboration around Einstein, Salesforce’s AI assistant. Moving on to more general customer examples, Selipsky calls out that over 80% of unicorns (companies valued at over a billion dollars) run on AWS.

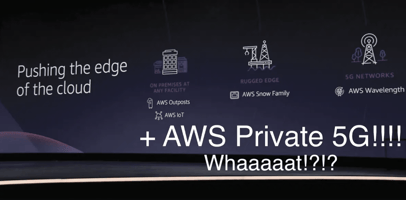

Next we shift to how AWS differentiates itself from other providers and how, as a company, it continually “Reinvents”. That brings us to infrastructure and stats. AWS now has 32 regions globally with 5 or so more on the way, each with 3 or more Availability Zones (AZs) up to 100 km apart. According to AWS, it has three times as many data centres, 60% more services and 40% more features than its largest competitor.

We pivot to storage and the first announcement:

Announcement: S3 Express One Zone

Amazon S3 Express One Zone is billed as the highest-performance, lowest-latency cloud object storage available. According to AWS, it is up to 10 times faster than standard S3, with 50% lower access costs. Pinterest has seen 10x faster write speeds and a 40% cost reduction for AI workloads.

Announcement: Graviton4 and R8g instances for EC2

Graviton4 is the fourth generation of AWS’s own chip. It has 30% more cores than Graviton3 and is claimed to be 40% faster for database applications. R8g EC2 instances are the first to be available with the new chip.

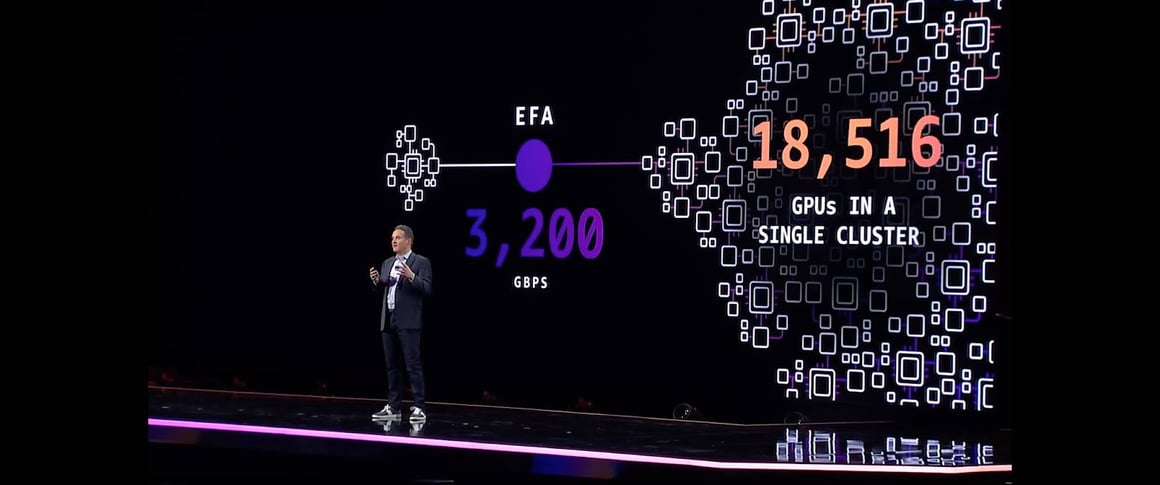

We shift into generative AI, which will no doubt be a huge talking point across all the keynotes and announcements. AWS thinks of GenAI as three layers, and it is focusing on all of them: first, the infrastructure for training Foundation Models (FMs) and Large Language Models (LLMs); second, the tools to build with and access those LLMs and FMs; and finally, the applications that leverage them. Starting with the infrastructure layer, the presentation turns to the GPUs that power AI. Customers can scale up to 20,000 GPUs in a single cluster in AWS, all built on the Nitro hypervisor. Enter NVIDIA CEO Jensen Huang and more sneakers, this time paired with a leather jacket, which feels somewhat more appropriate.

NVIDIA is announcing a new chip available on AWS, the GH200 Grace Hopper Superchip. With GH200, tensor inference time improved by a factor of 4. GH200 connects a CPU and GPU using NVLink, which allows each chip to directly access the other’s memory. NVIDIA is also bringing DGX Cloud, its own AI “workshop” platform, to AWS. There is a whole lot of talk about FLOPS. Good FLOPS though, like gigaflops and exaflops. Skipping over the details: if you look at the number of new data centres being built, a large driver is the extra computing power needed for AI. AI needs lots of compute, and compute needs lots of data centres.

New Zealand is an interesting place to consider for these sorts of workloads, which may not need low latency to the end user. We have readily available renewable energy that meets cloud providers’ green ambitions, we are very stable geopolitically, and we are relatively simple to do business with. CDC, NextDC, DCI and Microsoft are all building data centres around Auckland (not to mention the government DC planned for Whenuapai), and another data centre may be going into Invercargill. The hyperscalers AWS, Microsoft and Google all have plans to establish cloud regions in NZ.

Announcement: EC2 Capacity Blocks for ML

EC2 Capacity Blocks for ML lets customers reserve GPU capacity in EC2 UltraClusters for a defined period, so they can be sure capacity will be available when they need it without paying for it when they don’t.

AWS Trainium is AWS’s purpose-built chip for training, and AWS Inferentia is its purpose-built chip for inference. Both are designed to deliver the best price performance for AI models.

Announcement: AWS Trainium2

AWS Trainium2 delivers up to 4x faster performance than the first-generation Trainium chip.

AWS Neuron is the AWS SDK for building training and inference pipelines optimised for Trainium and Inferentia. SageMaker is AWS’s service for developers to create, train and deploy ML models. We’re now into the middle of the AI stack and Amazon Bedrock, which helps you build and scale GenAI applications using industry-leading Foundation Models from a number of providers, and then customise them with your own data.

Everyone is moving fast and the ability to adapt matters. Choice matters, we are told. You need to be able to switch models, combine models and have a choice about providers of models. The events of the last 10 days have highlighted this (Hi Sam Altman from Adam!). With AWS you can switch model providers with an API call.
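As a rough illustration of the “switch models with an API call” point, here is a minimal Python sketch, assuming boto3’s `bedrock-runtime` client and its `invoke_model` call. Switching providers mostly means changing the `modelId`, although each provider expects its own request body. The body shapes below (Anthropic Claude v2 text completion and Amazon Titan Text) are my assumptions based on the formats documented at launch, so check the current Bedrock docs before relying on them; `build_invoke_request` and `invoke` are hypothetical helper names.

```python
import json


def build_invoke_request(model_id: str, prompt: str, max_tokens: int = 300) -> dict:
    """Build keyword arguments for bedrock-runtime invoke_model (hypothetical helper).

    Assumption: the per-provider body formats below match the Claude v2 and
    Titan Text request schemas; verify against current Bedrock documentation.
    """
    if model_id.startswith("anthropic."):
        # Claude v2 text-completion style prompt (assumed format).
        body = {
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": max_tokens,
        }
    elif model_id.startswith("amazon.titan-text"):
        # Titan Text style request (assumed format).
        body = {
            "inputText": prompt,
            "textGenerationConfig": {"maxTokenCount": max_tokens},
        }
    else:
        raise ValueError(f"no request format defined for {model_id}")
    return {
        "modelId": model_id,
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(body),
    }


def invoke(model_id: str, prompt: str) -> dict:
    """Call Bedrock; needs boto3 and AWS credentials with Bedrock model access."""
    import boto3  # imported lazily so build_invoke_request works without boto3

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(**build_invoke_request(model_id, prompt))
    return json.loads(response["body"].read())
```

The calling code stays the same either way: `invoke("anthropic.claude-v2", "Summarise re:Invent")` and `invoke("amazon.titan-text-express-v1", "Summarise re:Invent")` differ only in the model ID, with the body format switched behind it.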

Amazon Titan models are Amazon’s own foundation models, built in-house. Interestingly, AWS will indemnify customers against IP infringement claims when they use Titan models.

Announcement: Amazon Bedrock

There is a range of new Bedrock features, which I won’t go into in depth. These include:

- Fine Tuning

- Retrieval Augmented Generation (RAG) with Knowledge Bases

- Continued Pre-training for Amazon Titan Text Lite & Express

- Agents for Bedrock to enable multi-step processes

- Guardrails for Bedrock
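Knowledge Bases manage the retrieval side of RAG for you, but the underlying pattern is simple enough to sketch. The Python below is a generic toy illustration of RAG, not how Bedrock implements it: hand-made vectors stand in for an embedding model, a plain list stands in for the vector store, and `cosine`, `retrieve` and `augment_prompt` are hypothetical names. It ranks documents by cosine similarity to the query vector and prepends the best matches to the prompt that would be sent to the FM.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, docs, k=2):
    """docs is a list of (text, vector) pairs; return the k most similar texts."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def augment_prompt(question, passages):
    """Ground the model by putting the retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Toy 2-D "embeddings"; a real system would call an embedding model here.
    docs = [
        ("Refunds take 5 days", [1.0, 0.0]),
        ("Office is in Auckland", [0.0, 1.0]),
        ("Refund requests go via the portal", [0.9, 0.1]),
    ]
    passages = retrieve([1.0, 0.0], docs, k=2)
    print(augment_prompt("How long do refunds take?", passages))
```

The two refund documents score highest against the refund-style query vector, so only they reach the prompt; this is the “customise with your own data” idea without retraining the model.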

Agents for Bedrock lets you create an Agent in the following steps:

- Select your FM.

- Provide basic instructions (e.g. you are a friendly support assistant)

- Specify the Lambda functions for API calls

- Select relevant data sources.

- Create the Agent.
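For the “Specify the Lambda functions” step, here is a minimal sketch of what such a function might look like. It assumes the event and response shapes I understand Agents for Bedrock to use for action groups defined with an OpenAPI schema (an `apiPath`, `httpMethod` and a list of `parameters` in; a `messageVersion` and `response` object out); treat those field names as assumptions to verify against the current docs. The `/orders/{orderId}/status` path and the stubbed order lookup are hypothetical.

```python
import json


def lambda_handler(event, context):
    # Assumed event shape: the agent passes the matched apiPath, httpMethod
    # and a list of {"name", "type", "value"} parameters from the OpenAPI schema.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}

    if event["apiPath"] == "/orders/{orderId}/status":  # hypothetical API path
        # Stubbed lookup; a real function would query an order system here.
        body = {"orderId": params.get("orderId"), "status": "shipped"}
        status_code = 200
    else:
        body = {"error": f"unknown path {event['apiPath']}"}
        status_code = 404

    # Assumed response shape: echo the action group, path and method, and
    # return the payload as a JSON string keyed by content type.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "apiPath": event["apiPath"],
            "httpMethod": event["httpMethod"],
            "httpStatusCode": status_code,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```

The agent decides when to call the function based on the user’s request and the API description; the function itself just does the lookup and hands structured data back for the FM to phrase.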

Agents (somewhat confusingly) are not a customer-facing technology; instead, gen-AI apps that provide self-service will use Agents behind the scenes.

The talk turns to security with Guardrails for Bedrock, which lets you easily configure controls such as harmful content filtering and restricting access to personally identifiable information (PII).

The conversation shifts towards coding. Amazon CodeWhisperer provides AI-powered code suggestions in the IDE, with a number of businesses now using it. AWS has made CodeWhisperer free for individual use.

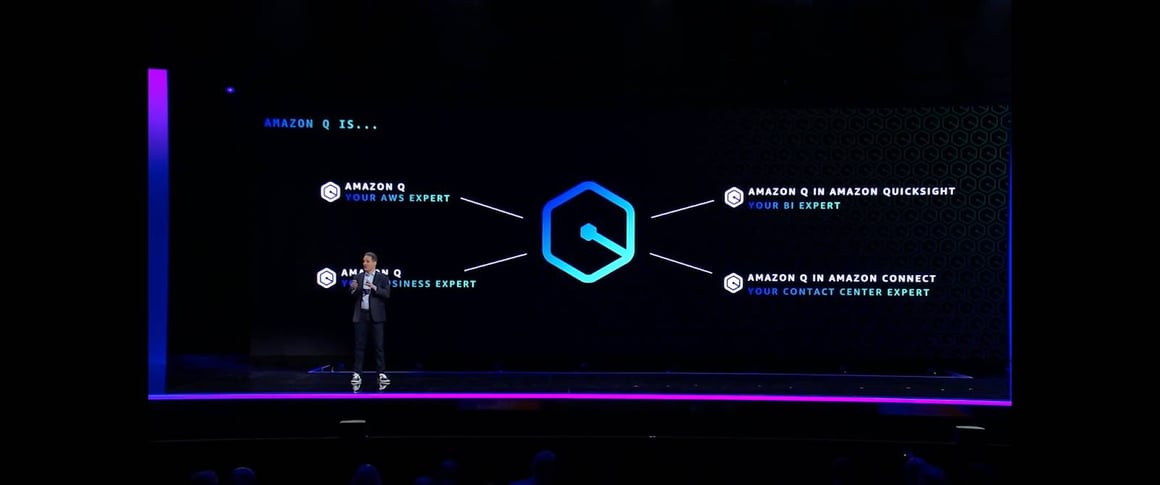

Announcement: Amazon Q

Amazon Q is a generative AI assistant for work that is tailored to your business. Q understands existing roles and permissions and acts as an expert assistant you can talk to in natural language.

Q is targeted right now at four use cases:

- Help developers and builders in AWS

- Help people at work

- Help with business intelligence

- Help with customer service.

Q is available to help with building on AWS, including architecture, troubleshooting and optimisation.

Q will also be available in the IDE, where it will assist developers with drafting, reviewing and publishing code. Amazon Q Code Transformation assists with code upgrades. It currently supports Java upgrades and will soon support porting .NET applications from Windows to Linux.

Q can also act as a business expert, connecting to a number of data sources including Salesforce, Google Drive, Microsoft 365 and ServiceNow, to name a few.

You can configure Q for your business in three steps:

- Configure Q with details of your own organisation and connect it to a data source

- Once connected, Q starts indexing data and content and learning the business semantics

- Open Q in your browser and start querying.

You can also upload files like CSVs on the fly and add business context to help improve results. Q respects existing roles and permissions.

The demo actually looks really good: very simple to set up, with several ways to keep improving the results. One thing missing from a number of the AI assistants announced to date (particularly within productivity apps) is the ability to reach beyond the productivity suite and understand other business data.

Announcement: Amazon Q in QuickSight

Amazon Q in QuickSight brings Q functionality into QuickSight to interrogate data and also produce and manipulate dashboards. I’ve separated this from the general Q announcements above as it is quite domain-specific. Q in QuickSight can go further still and produce a full monthly performance report, including commentary.

Amazon Q in Amazon Connect lets Q sit in on Amazon Connect calls and perform a number of functions using natural language processing.

We now shift to data, starting with a talk from BMW. I just heard “We update 6 million cars over the air” - while not new now, it’s quite cool to hear about this progression from more car manufacturers. To get the most out of your data, we are told, you need a comprehensive set of data services, and your data needs to be integrated and governed.

We first run through the various data services available before moving on to an announcement about new integrations.

Announcement: Zero-ETL

Zero-ETL integrations between data services including:

- Redshift with Aurora PostgreSQL

- Redshift with RDS for MySQL

- Redshift with DynamoDB

- DynamoDB with Amazon OpenSearch

Announcement: DataZone AI recommendations

Amazon DataZone AI recommendations generate business context for your data catalogues.

Amazon’s Project Kuiper (pronounced “ky par”) is building a low earth orbit (LEO) satellite network to provide portable broadband around the globe. Imagine Starlink. But with an Amazon logo. But wait, Kuiper will also provide private enterprise network services rather than just public internet access - which I guess is useful, as you won’t have to deploy additional SD-WAN equipment or similar to create a private overlay network. If they are achieving this through their own encrypted overlay, it could also pave the way for their own SD-WAN edge offering that integrates with AWS Cloud WAN. It is worth noting that with AWS Private 5G, Cloud WAN and the coming LEO satellite service, their telco-type offerings sure are growing.

And that’s it. Apart from a man called Zak/Zack/Zac playing a guitar. I wouldn’t call it the most earth-shattering re:Invent CEO keynote, but there were some solid developments around the use of AI, both to simplify the use of AWS services and to provide direct business services.