A quick disclaimer: we are not developers; we are ‘integrators’. Our job is to watch the market to...

Serverless computing vs containers

Serverless computing and containers are application development models that have grown immensely popular in the world of DevOps. When used correctly, they can make application development significantly faster and more cost-effective. Picking your technology approach before you begin building or scaling your applications is important, but both models have their place and neither is technically better than the other. In this blog, I will compare the two technologies, covering where they overlap and where they differ, and explain which one you might want to consider based on your needs.

What are containers?

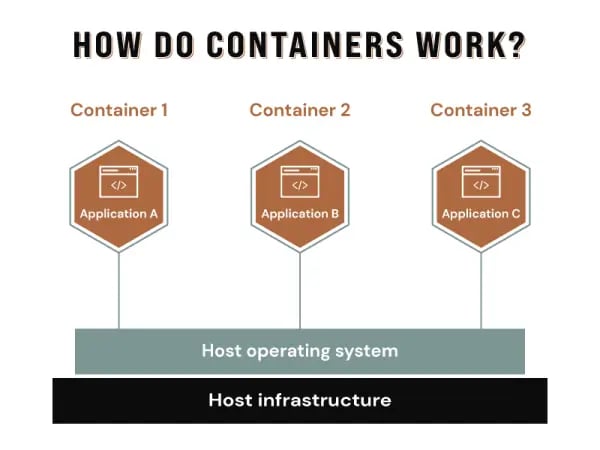

Containers are a lightweight, portable form of operating-system-level virtualisation. Each container packages an application together with all the dependencies it needs to function properly. Rather than emulating an entire operating system, containers share the host system's kernel and resources, which makes them more efficient than virtual machines. Containers can be packaged up, separated from their underlying infrastructure and deployed consistently across other environments, including physical on-prem servers, in the Cloud or via a managed service.

A containerised application can also be built from multiple containers, each performing a specific function for the application. For instance, one application could include a web server, an application server and a database, each running simultaneously in its own container. Containers are generally designed to be stateless. Data written inside a container is lost when the container is removed, so anything that must outlive it is usually kept in a mounted volume or an external service.

How do containerised applications work?
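The toy model below sketches the container lifecycle and state behaviour described in this section. It is illustrative only and is not how a real container runtime works. An image is a read-only template, and each container gets its own private writable layer on top of it. A volume lives outside any single container. The `Image` and `Container` classes and the `/data/` mount point are all hypothetical.

```python
from dataclasses import dataclass, field

# Toy model for illustration only; not a real container runtime.
# The "/data/" mount point is a hypothetical volume location.
VOLUME_MOUNT = "/data/"


@dataclass
class Image:
    """Read-only template: the application plus its dependencies."""
    name: str
    files: dict


@dataclass
class Container:
    image: Image
    volume: dict  # lives outside the container and can be reattached
    writable_layer: dict = field(default_factory=dict)  # private to this container

    def write(self, path: str, content: str) -> None:
        # Writes under the mount point go to the volume; everything else
        # goes to this container's own writable layer.
        if path.startswith(VOLUME_MOUNT):
            self.volume[path] = content
        else:
            self.writable_layer[path] = content

    def read(self, path: str):
        if path.startswith(VOLUME_MOUNT):
            return self.volume.get(path)
        # Files written by this container shadow files baked into the image.
        return self.writable_layer.get(path, self.image.files.get(path))


app = Image("web-app", {"/app/main.py": "app code v1"})
orders_volume = {}

first = Container(app, orders_volume)
first.write("/tmp/session.txt", "user=alice")  # container-local only
first.write("/data/orders.txt", "order-1")     # persisted in the volume
first = None  # "remove" the container: its writable layer is discarded

second = Container(app, orders_volume)  # fresh container, same image and volume
print(second.read("/app/main.py"))       # app code v1 (from the image)
print(second.read("/tmp/session.txt"))   # None (lost with the first container)
print(second.read("/data/orders.txt"))   # order-1 (survived in the volume)
```

Every container started from the same image begins from an identical state. This is what makes containers portable. It is also why persistent data has to be placed somewhere deliberate.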

Container pros & cons

Benefits of using containerised applications:

- Greater configuration & control

- Vendor-agnostic

- Supports any programming language as long as the host server supports it

- Easier migration path

- Portable, can be deployed in most environments

Drawbacks of using containerised applications:

- Requires significant administration & manual intervention

- Limited supported environments (Linux & some Windows)

- Slower scaling than serverless

- Higher running costs (infrastructure & personnel)

- Harder to get started

What is serverless computing?

Serverless computing is a method of writing, hosting and executing code via the Cloud, although some rare exceptions use on-premise infrastructure. It essentially enables developers to focus on writing and deploying applications rather than concerning themselves with the infrastructure that underpins them, as the servers are managed entirely by a third-party vendor. We recently covered this in detail in our article: ‘What is serverless computing?’.
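To make "focus on writing code rather than infrastructure" concrete, here is a minimal sketch of a Function-as-a-Service handler. It uses the `handler(event, context)` shape that AWS Lambda expects for Python functions. The event and response fields assume an API Gateway-style HTTP trigger. Other triggers and other Cloud vendors use different shapes. The function name and greeting logic are hypothetical.

```python
import json


def lambda_handler(event, context):
    # The event shape assumed here is an API Gateway-style HTTP request;
    # other triggers (queues, file uploads, timers) send different events.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    # No server, port or process management appears here: the platform
    # calls this function once per request and runs as many instances as needed.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Locally the handler is just a function, so it can be called directly
    # with a sample event; None stands in for the context object.
    print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None))
```

Everything outside this function is the vendor's responsibility: the web server, the operating system and scaling. That division of labour is the main appeal of serverless and also the source of the vendor lock-in discussed below.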

Serverless pros & cons

Benefits of using serverless applications:

- Quicker to get started/quicker to deploy code

- Only pay for what you use and reduce wasted spending on idle servers

- Auto-scaling & dynamic resource allocation

- Simplified backend coding with Function-as-a-Service (FaaS)

- Significantly reduced administration & maintenance burden
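The "only pay for what you use" benefit can be checked with back-of-the-envelope arithmetic. The sketch below compares an always-on server, billed every hour, with pay-per-use billing charged per request plus memory × execution time (GB-seconds), a model several serverless platforms use. Every price in it is a made-up placeholder rather than a real vendor rate. It also ignores free tiers, data transfer and the personnel costs mentioned in this article.

```python
import math

HOURS_PER_MONTH = 730  # average hours in a month


def always_on_cost(hourly_rate: float) -> float:
    # A provisioned server is billed for every hour, busy or idle.
    return hourly_rate * HOURS_PER_MONTH


def cost_per_request(avg_duration_s: float, memory_gb: float,
                     price_per_million_requests: float,
                     price_per_gb_second: float) -> float:
    # Pay-per-use billing: a small fee per request plus the compute actually
    # consumed (memory allocated x seconds spent running).
    return (price_per_million_requests / 1_000_000
            + avg_duration_s * memory_gb * price_per_gb_second)


def pay_per_use_cost(requests: int, **pricing) -> float:
    return requests * cost_per_request(**pricing)


def break_even_requests(hourly_rate: float, **pricing) -> float:
    # Monthly request volume above which the always-on server is cheaper.
    return always_on_cost(hourly_rate) / cost_per_request(**pricing)


# Hypothetical prices, for illustration only.
pricing = dict(avg_duration_s=0.2, memory_gb=0.5,
               price_per_million_requests=0.20, price_per_gb_second=0.00002)

print(f"Always-on server:    ${always_on_cost(0.10):.2f}/month")
print(f"1M serverless calls: ${pay_per_use_cost(1_000_000, **pricing):.2f}/month")
print(f"Break-even volume:   {math.floor(break_even_requests(0.10, **pricing)):,} requests/month")
```

Below the break-even volume, pay-per-use wins because idle time costs nothing. Above it, a steadily busy server becomes the cheaper option.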

Drawbacks of using serverless applications:

- No standardisation across Cloud vendors

- ‘Black box’ environment makes it difficult to debug & test

- Cold starts for dormant functions

- Language must be supported by the Cloud vendor

- Complex applications can be difficult to build

- Limited maximum runtime per invocation (e.g. 15 minutes on AWS Lambda, 10 minutes on the Azure Functions Consumption plan)

- Vendor lock-in
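The cold-start drawback listed above can be pictured with a small simulation. A request that arrives while an instance is still warm is served immediately. A request that arrives after the instance has been idle for too long pays a start-up delay first. The `ToyFunctionPlatform` class is hypothetical. Its start-up time and idle timeout are invented numbers, because real platforms vary by provider and do not guarantee a fixed idle window. The model also handles one request at a time and ignores concurrency.

```python
class ToyFunctionPlatform:
    """Simulated serverless platform; all timings are invented."""

    def __init__(self, init_seconds: float, handler_seconds: float,
                 idle_timeout_seconds: float):
        self.init_seconds = init_seconds                  # start-up cost of a new instance
        self.handler_seconds = handler_seconds            # time the function itself takes
        self.idle_timeout_seconds = idle_timeout_seconds  # how long an idle instance is kept
        self.last_finished = None                         # None: no warm instance exists

    def invoke(self, now: float):
        """Return ("cold" or "warm", latency) for a request arriving at `now`."""
        warm = (self.last_finished is not None
                and now - self.last_finished <= self.idle_timeout_seconds)
        latency = self.handler_seconds if warm else self.init_seconds + self.handler_seconds
        self.last_finished = now + latency
        return ("warm" if warm else "cold"), latency


platform = ToyFunctionPlatform(init_seconds=1.5, handler_seconds=0.05,
                               idle_timeout_seconds=600)
for t in (0, 30, 60, 2_000):  # seconds since the first request
    kind, latency = platform.invoke(t)
    print(f"t={t:>5}s  {kind:<4}  latency={latency:.2f}s")
```

Only the first request and the one after a long quiet period pay the start-up cost. This is why cold starts matter most for functions with sparse, irregular traffic.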

What are the similarities between containers and serverless computing?

Although containers and serverless are different technologies, they share several essential characteristics. Both models enable developers to write, host and execute code while separating applications from the host infrastructure. With the right tools, both approaches can also scale automatically as the workload increases. Containers and serverless achieve all of this with fewer overheads and less complexity than virtual machines, but that is where the similarities end.
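One way to reason about how far either model has to scale is Little's law. It is not mentioned in the original article and is brought in here only as an illustration. The average number of requests in flight equals the arrival rate multiplied by the time each request takes. The sketch assumes that a function instance serves one request at a time, which is roughly how AWS Lambda behaves. It also assumes a container replica that can serve 20 requests concurrently. That figure is a hypothetical number, not a property of any particular container platform.

```python
import math


def instances_needed(requests_per_second: float, avg_duration_s: float,
                     concurrent_requests_per_instance: int) -> int:
    """Estimate how many instances must run at once to keep up with load."""
    # Little's law: requests in flight = arrival rate x time each request takes.
    in_flight = requests_per_second * avg_duration_s
    # Each instance can only work on a fixed number of requests at a time;
    # zero traffic needs zero instances ("scale to zero").
    return math.ceil(in_flight / concurrent_requests_per_instance)


# 200 requests/s, each taking 250 ms, means about 50 requests in flight.
print(instances_needed(200, 0.25, 1))   # one request per function instance → 50
print(instances_needed(200, 0.25, 20))  # a container serving 20 at once → 3
```

The same load can mean many short-lived function instances or a few long-running container replicas. The autoscaler (the serverless platform, or a container orchestrator) is what adjusts that number as traffic changes.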

What are the differences between containers and serverless computing?

| | Containers | Serverless |
|---|---|---|
| Hosting | Containers can be hosted on certain versions of Windows as well as any modern Linux server. | Serverless functions must run on specific host platforms, most of them in the Public Cloud (such as AWS Lambda, Azure Functions or Google Cloud Functions). |
| Configuration & Admin | You’ll either need to manage your own on-premise infrastructure for containers or use a fully managed container orchestration service such as Amazon ECS. | You’ll need to use the platform provided by the Cloud vendor to configure your serverless infrastructure & applications. You may also need to modify your code if you change Cloud providers. |
| Testing & Debugging | As you have access to the backend infrastructure, debugging and testing application code in containers before deploying to production shouldn’t be an issue. | The backend of serverless applications is essentially a ‘black box’, as it belongs to the Cloud vendor. This lack of visibility makes debugging and testing code before deployment difficult. However, cloud service emulators such as LocalStack are making it possible to test code before deploying it, although at the time of writing LocalStack only supports AWS. |
| Programming Languages | Containerised applications can be written in any language, as long as the server hosting the container supports it. | You’ll need to code in a language that is supported by your Cloud vendor. However, providers are continually expanding their lists of supported languages. |
| Runtime | Containers can execute for prolonged periods. | Serverless functions have a maximum execution time per invocation (for example, 15 minutes on AWS Lambda). If your workloads take longer than this, you will need to consider another solution. |
| Availability / Cold Starts | Depending on the underlying infrastructure, containerised applications should be constantly available. | Serverless functions that have become dormant due to inactivity can be shut down by the Cloud vendor to save resources. When the function is next called, it must be started again before it can execute, which adds latency to that request. This is referred to as a ‘cold start’. |
| Statefulness | Containers are generally designed to be stateless, as data written inside a container is lost when the container is removed. However, with the right tools (such as persistent volumes), creating stateful containerised applications is possible. | Although some providers offer limited support for stateful services, most serverless computing platforms are designed to be stateless. Serverless applications can be connected to external storage services, but the functions themselves are stateless. |
| Cost & Vendors | Although there are plenty of free, open-source container engines and orchestrators available, you’ll need to pay for the provisioning and maintenance of the underlying infrastructure, which may include personnel. You’ll also pay for the server infrastructure whether or not the containers are in use. | With serverless, you only pay for the resources you use, which reduces wasted spending on idle servers. As the vendor manages the infrastructure, you’ll also save on overheads, since you’ll need fewer infrastructure engineers and other specialists. However, because a Cloud vendor manages your infrastructure, be aware that your business may become reliant on them and locked in. |
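A common way to live within the runtime cap in the table above is to split long jobs into chunks. The function watches how much time is left and hands back a checkpoint so that a later invocation can resume. The sketch relies on the Lambda Python context object's `get_remaining_time_in_millis()` method. The event fields (`items`, `start`), the `process` placeholder, the 10-second safety margin and the `FakeContext` test stand-in are all assumptions. Re-invoking the function with the checkpoint would be handled by something else (for example, a queue or workflow service), which is not shown.

```python
SAFETY_MARGIN_MS = 10_000  # stop this long before the platform's time limit (assumed)


def process(item):
    # Placeholder for the real per-item work (hypothetical).
    return item * 2


def batch_handler(event, context):
    items = event["items"]
    start = event.get("start", 0)  # checkpoint from a previous invocation
    results = []
    for i in range(start, len(items)):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Running out of time: return early and report where to resume.
            return {"done": False, "next_start": i, "results": results}
        results.append(process(items[i]))
    return {"done": True, "next_start": len(items), "results": results}


class FakeContext:
    """Local stand-in for the Lambda context; replays scripted remaining times."""

    def __init__(self, remaining_ms_sequence):
        self._remaining = iter(remaining_ms_sequence)

    def get_remaining_time_in_millis(self):
        return next(self._remaining)


first = batch_handler({"items": [1, 2, 3, 4]}, FakeContext([60_000, 50_000, 5_000]))
print(first)   # stops at index 2 because only 5 s remained
second = batch_handler({"items": [1, 2, 3, 4], "start": first["next_start"]},
                       FakeContext([60_000, 60_000]))
print(second)  # finishes the remaining items
```

If a task cannot be broken into chunks like this, the runtime cap is a hard constraint, and containers are the more natural fit.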

When should you choose containers or serverless?

Before we discuss when you should use containers or a serverless platform, it’s important to note that you can use both. Many organisations deploy serverless technologies alongside containers to deliver a single application or product. The two complement one another, because gaps left by one technology can be bridged by the other.

That said, there are a few common use cases where one technology may outperform the other. If you’re looking to build a complex application that will require a considerable amount of configuration, flexibility and control, then containers may be the better choice. On the other hand, if you’re building a lightweight application that needs to launch quickly or scale at a moment’s notice, then you may want to consider a serverless provider. If you would prefer to avoid managing infrastructure altogether, then serverless is the logical option.

This is, of course, a gross oversimplification, because no two applications are alike, so I recommend speaking to a DevOps expert before committing. Check out this article if you’re looking for a more comprehensive breakdown of the key traits of serverless computing. I hope this article has been helpful.
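The rules of thumb above can be written down as a small decision helper. This is an oversimplified sketch and not a substitute for the expert advice recommended above. The `Workload` fields and `suggest_model` function are hypothetical. The 15-minute default is an assumption based on AWS Lambda's current per-invocation limit, and other platforms differ.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    max_runtime_minutes: float           # longest single task the app must run
    language_supported_by_faas: bool     # is the language offered by the Cloud vendor?
    needs_fine_control: bool             # custom OS, runtimes, networking, etc.
    spiky_or_unpredictable_traffic: bool
    avoid_managing_infrastructure: bool


def suggest_model(w: Workload, faas_limit_minutes: float = 15) -> str:
    # Hard constraints first: work that a serverless platform cannot run at all.
    if w.max_runtime_minutes > faas_limit_minutes or not w.language_supported_by_faas:
        return "containers"
    # Complex apps needing lots of configuration and control favour containers.
    if w.needs_fine_control:
        return "containers"
    # Lightweight, bursty apps, or teams avoiding infrastructure, favour serverless.
    if w.avoid_managing_infrastructure or w.spiky_or_unpredictable_traffic:
        return "serverless"
    return "either (or both)"


print(suggest_model(Workload(2, True, False, True, True)))     # short, bursty API
print(suggest_model(Workload(90, True, False, False, False)))  # long batch job
```

The helper checks hard constraints before preferences because a workload that exceeds the runtime cap, or uses an unsupported language, rules serverless out regardless of how attractive pay-per-use billing is.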